Öredev Conference - Highlights from Day 1 2024

I had a great time at the first day of Öredev today.

After I got my ticket, I was welcomed with this gorgeous entrance into the main conference area.

Anywho, let’s get into some of my highlights from Day 1.

Sustainable IT: Connect the Dots to a Greener Future

The first talk I attended was by Saravanan K Nagarajan.

Before this talk, I was very much in the dark on how technology affected the environment and contributed to greenhouse gas emissions. I knew that there was some sort of relationship between the two - but the extent of that relationship was something I could only have taken a wild guess at.

Nagarajan shed some light on the fact that there is more computing power in our smartphones than in the computers used to send spaceships to the moon.

More fun facts: The tech sector is responsible for 2-3% of global greenhouse gas emissions. The aviation sector is responsible for 2%.

Sit on that.

He shared that since more businesses are moving to the cloud, there is less spend on physical infrastructure.

Nagarajan recommended some useful resources for people who want to look into this topic more, including:

- United Nations Act Now

- Green Software Foundation

- Carbon Hack - hackathon organised by the Green Software Foundation.

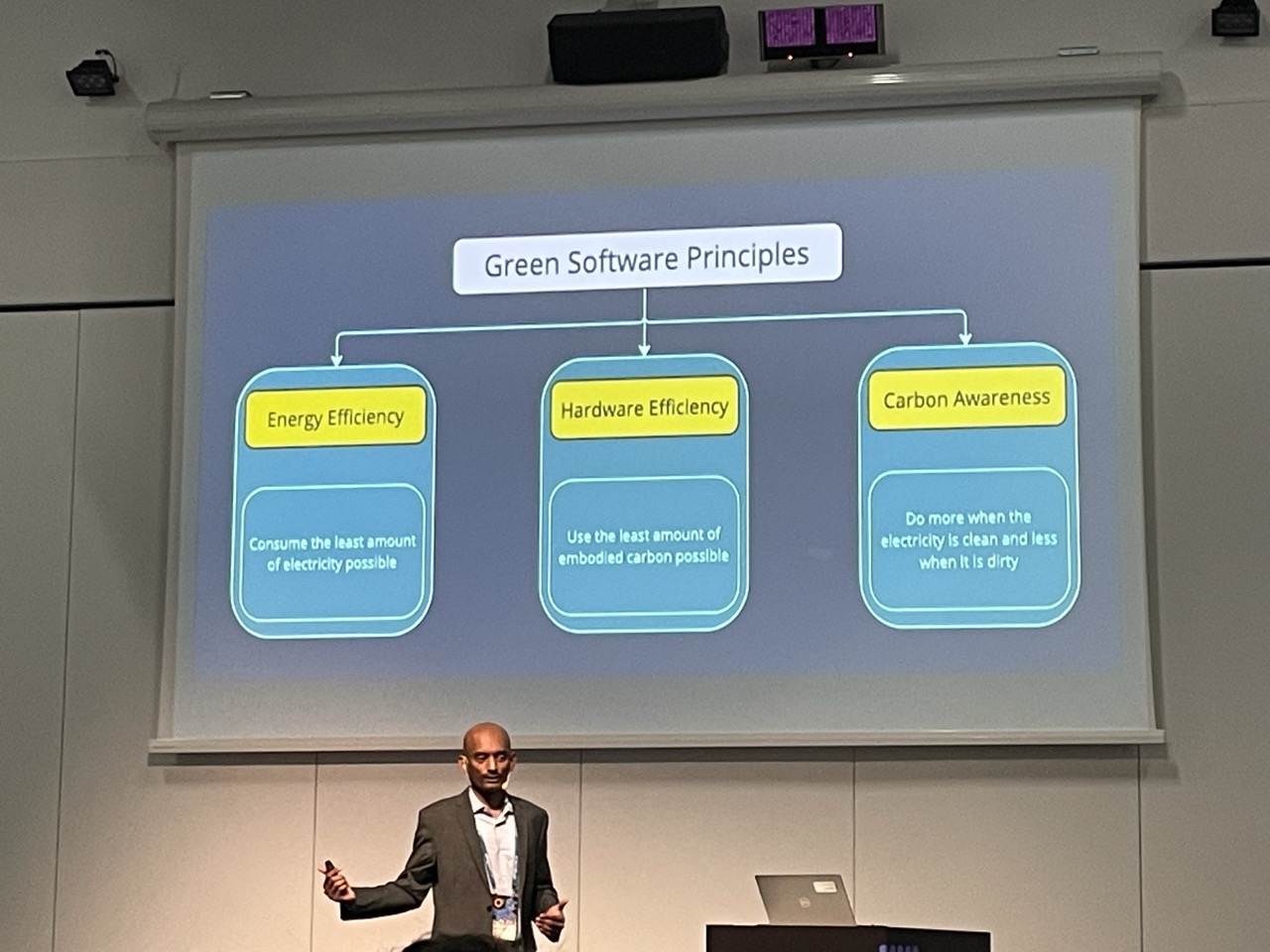

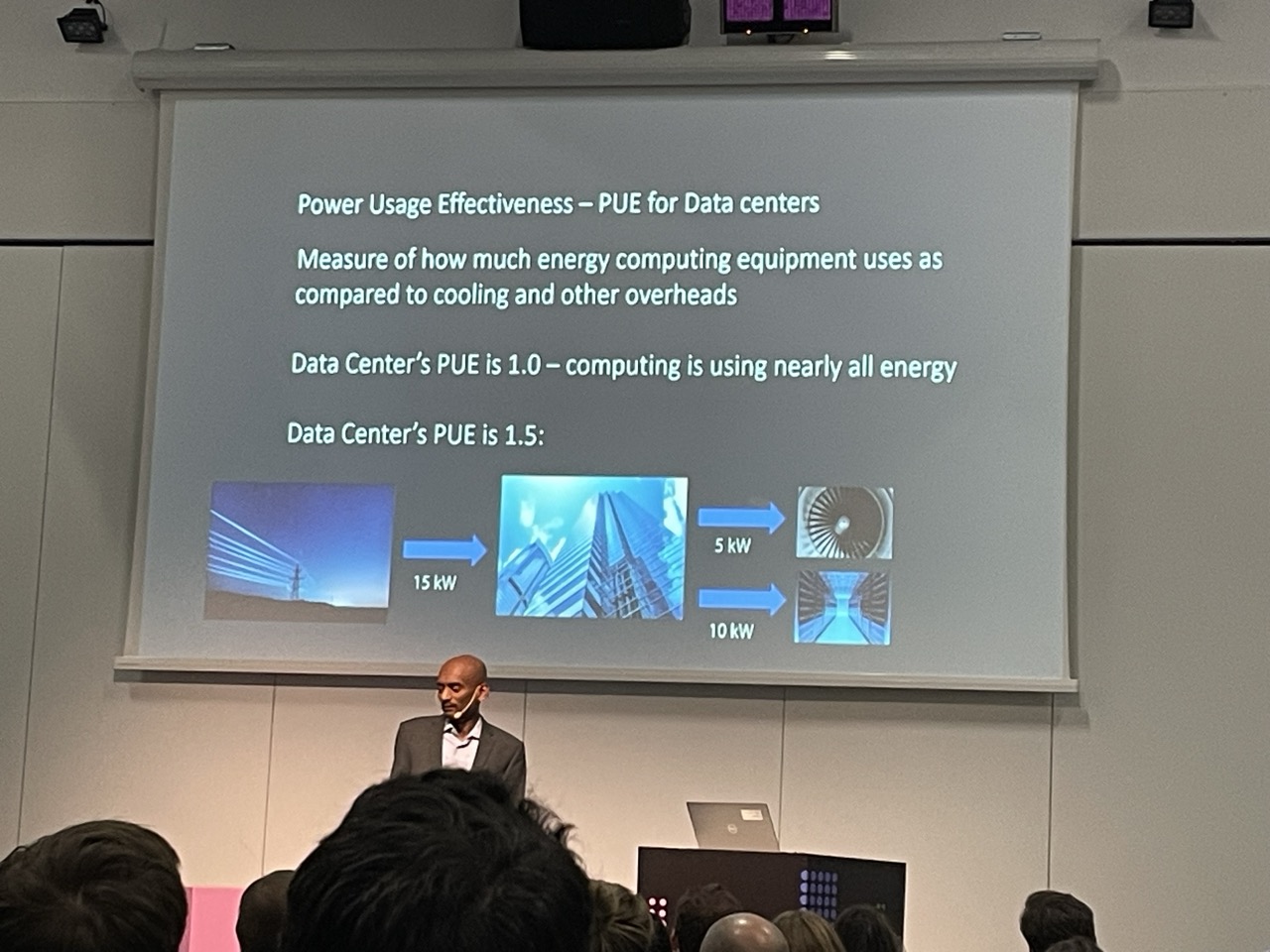

About halfway into the talk I got tired of taking notes, so I ended up just taking photos. Here are some below:

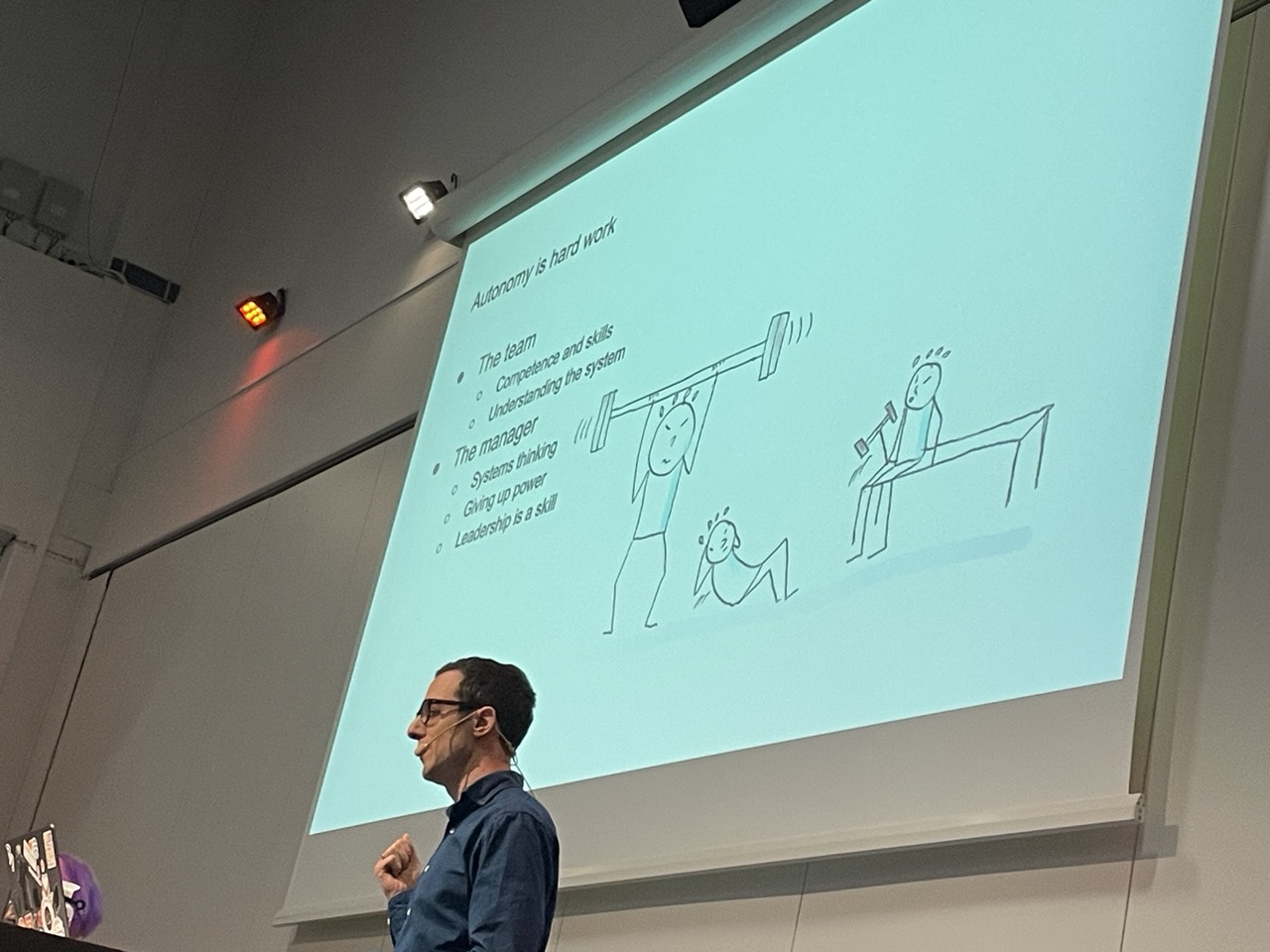

Autonomous teams require great managers

Next up was a talk by Gitte Klitgaard and Jakob Wolman to a fully packed room. I spent the 40ish min on the floor and it seemed at least 40-50 other people also didn’t have seats - it was that popular.

There were so many gems in this talk.

Here’s what I learned:

3 basic elements for a team to become autonomous successfully:

- A team that is willing to be autonomous (Does the team have the skills to do these things? Do they have the time?)

- A manager with good management skills (the manager sets frames and directions, and supports people. Helps the team work in an autonomous way)

- System. The system around the team needs to support this approach

Typical problem areas (that can make this difficult):

- Leadership is often a problem. Sometimes managers micromanage. Sometimes managers are nice but not kind (e.g. they don’t give feedback when needed).

- Hierarchy (dealing with abstraction levels. Managers need to funnel information down into the team). People don’t always know how to deal with/receive “strategy language”.

- Alignment. (Eg. Tech silos between teams. Different tech stacks in the org. Team members couldn’t help each other). Frames help give teams alignment. Teams have autonomy within these frames.

3 types of leaders: The first two types are heroic style.

- Expert (became a leader because they were a great dev)

- Achiever (evolution of expert. “We need to motivate the team to achieve these goals”)

- Catalyst (they focus on the system, on facilitating, coaching. More emphasis on collaboration. Going for the multiplier effect. They focus more on the long term. They equip the team to make decisions on their own)

Here’s the thing: the heroic leaders tend to be more visible to higher leadership. These leaders are more likely to be promoted as a result. Catalysts are more likely to stay “low level”.

The manager needs to make sure the right people are on the team and the wrong people are off the team.

Signs to look out for as a team, if you want autonomy:

- Micromanagement

- Forced accountability

- Lack of support

- Decisions are overruled (don’t offer the team a choice if they don’t actually have a choice)

Should you do something?

- It depends

- Do you care enough?

- Is it worth the investment?

- A bad system beats a good team

Beyond Code: The Essential AI Skillsets for Development Teams

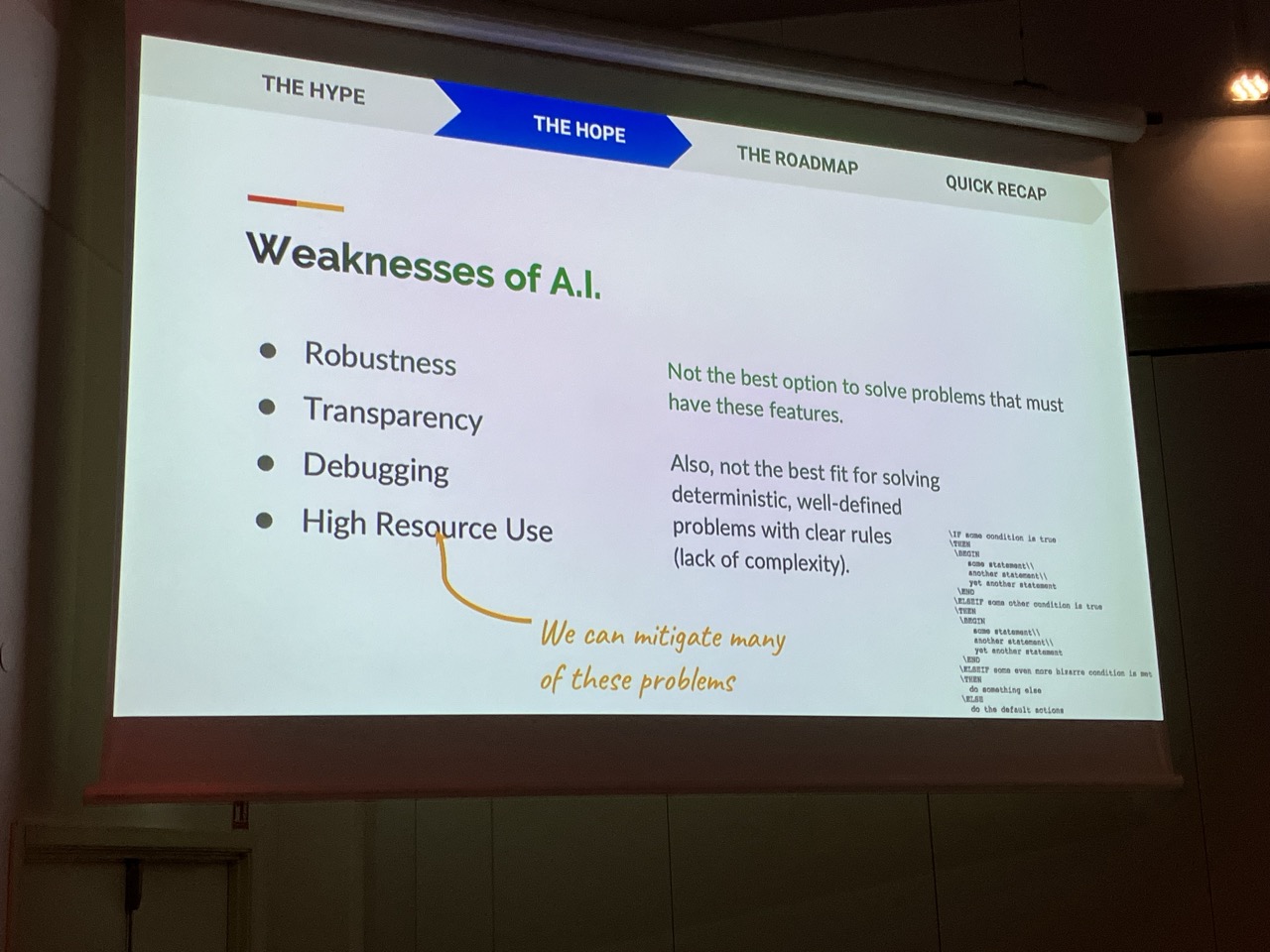

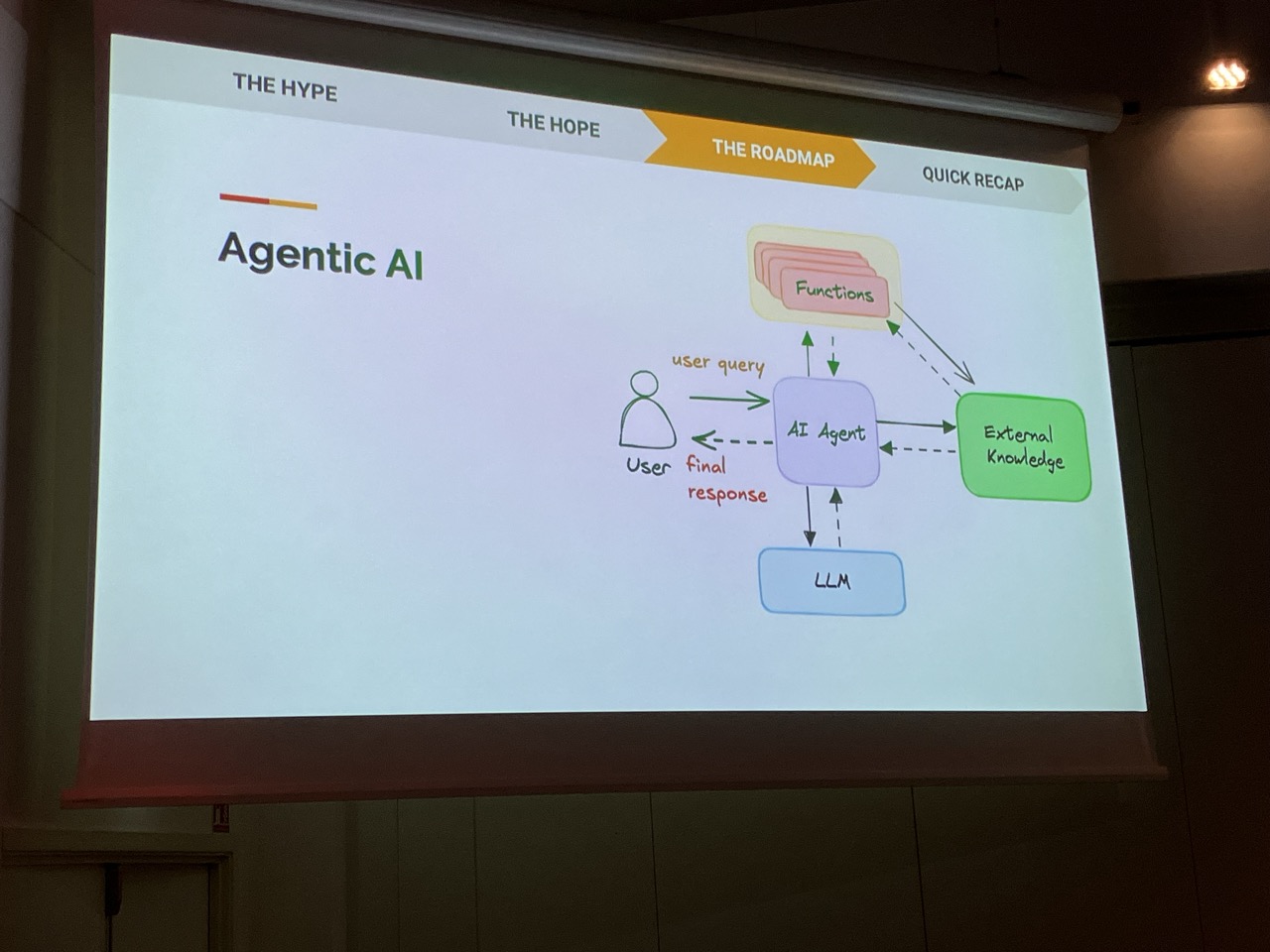

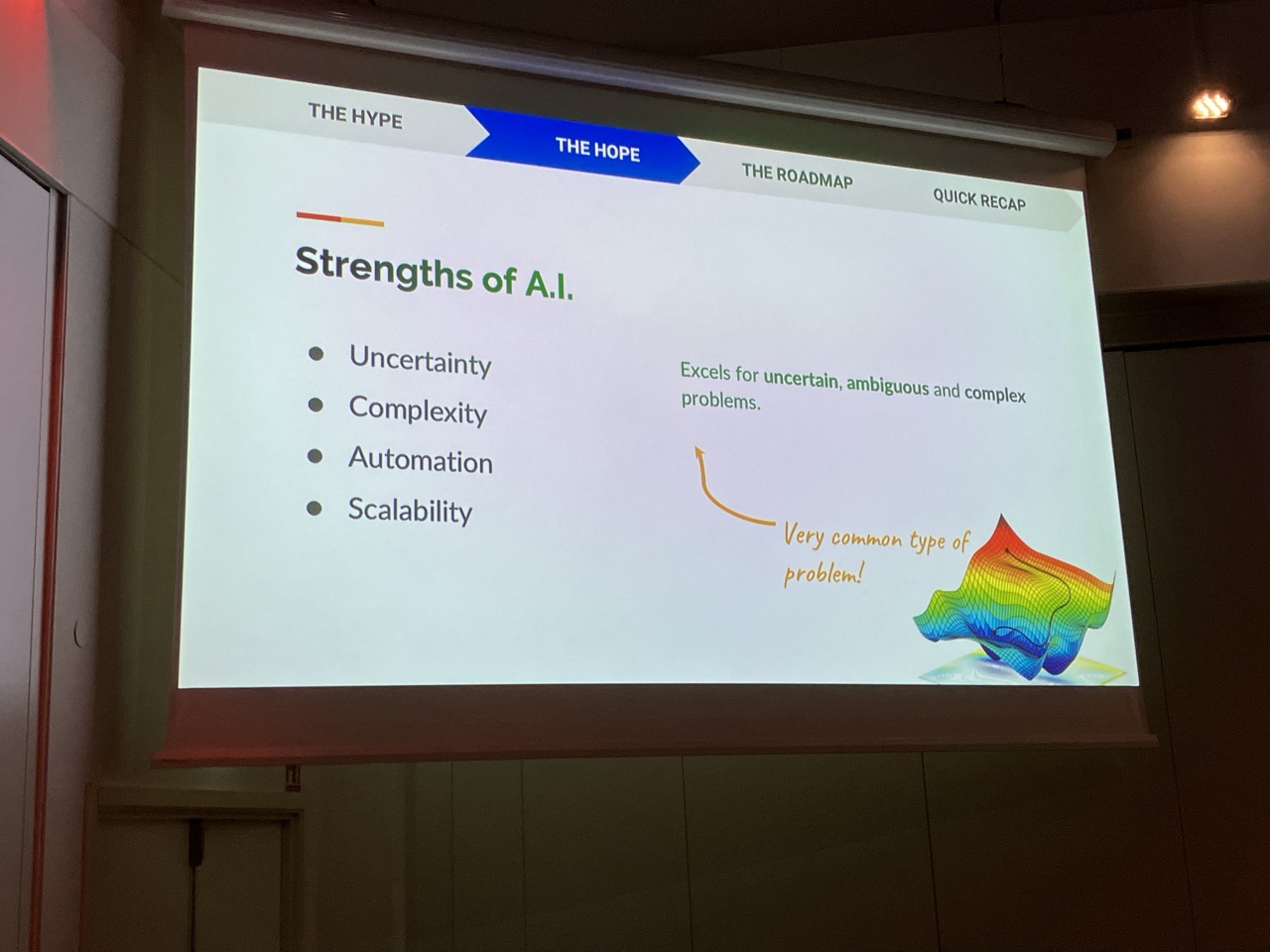

My main takeaway from this talk by Serg Masis is to not worry about AI taking our jobs but to think mainly about how we can use AI to assist us in our work.

He also shared that there is a tendency to not trust AI with high-risk tasks (which lines up with what I have been reading in Deep Medicine, even though the results in some areas of AI in medicine do look promising). My understanding is that there is a desire to maintain some sort of human control or ability to override the machines (if needed, because, ya know, I, Robot and all).

Some interesting resources he shared in his talk included:

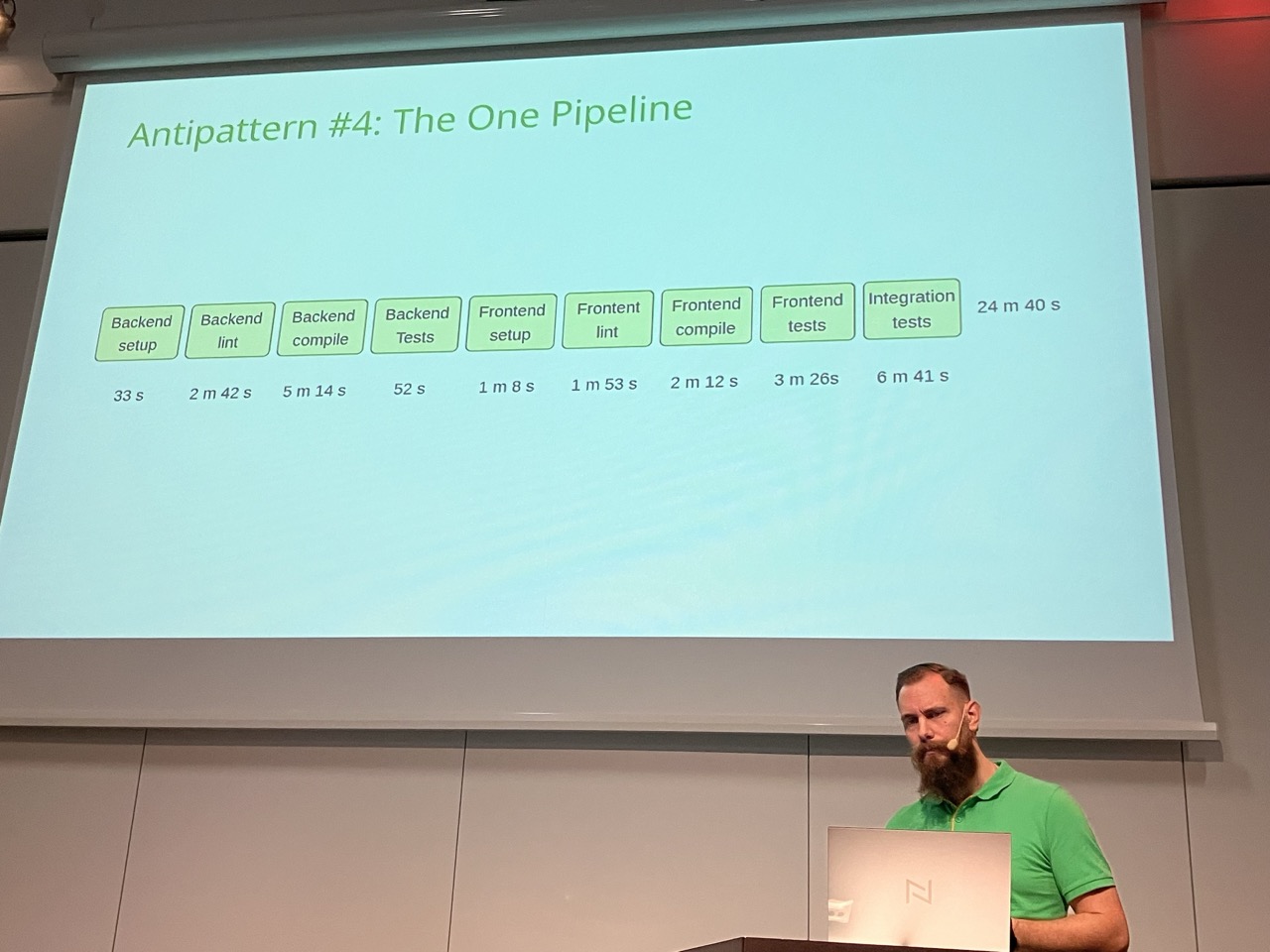

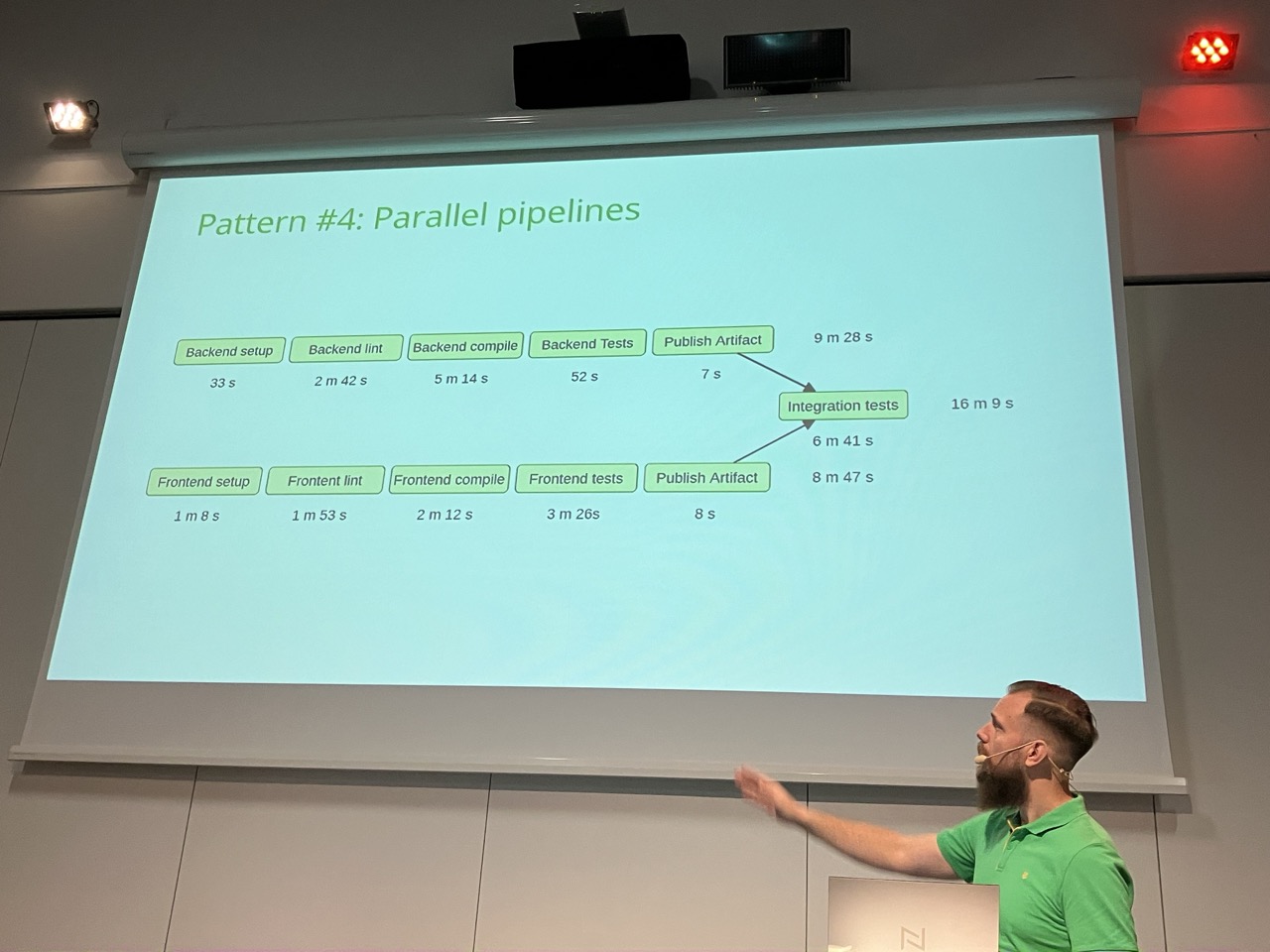

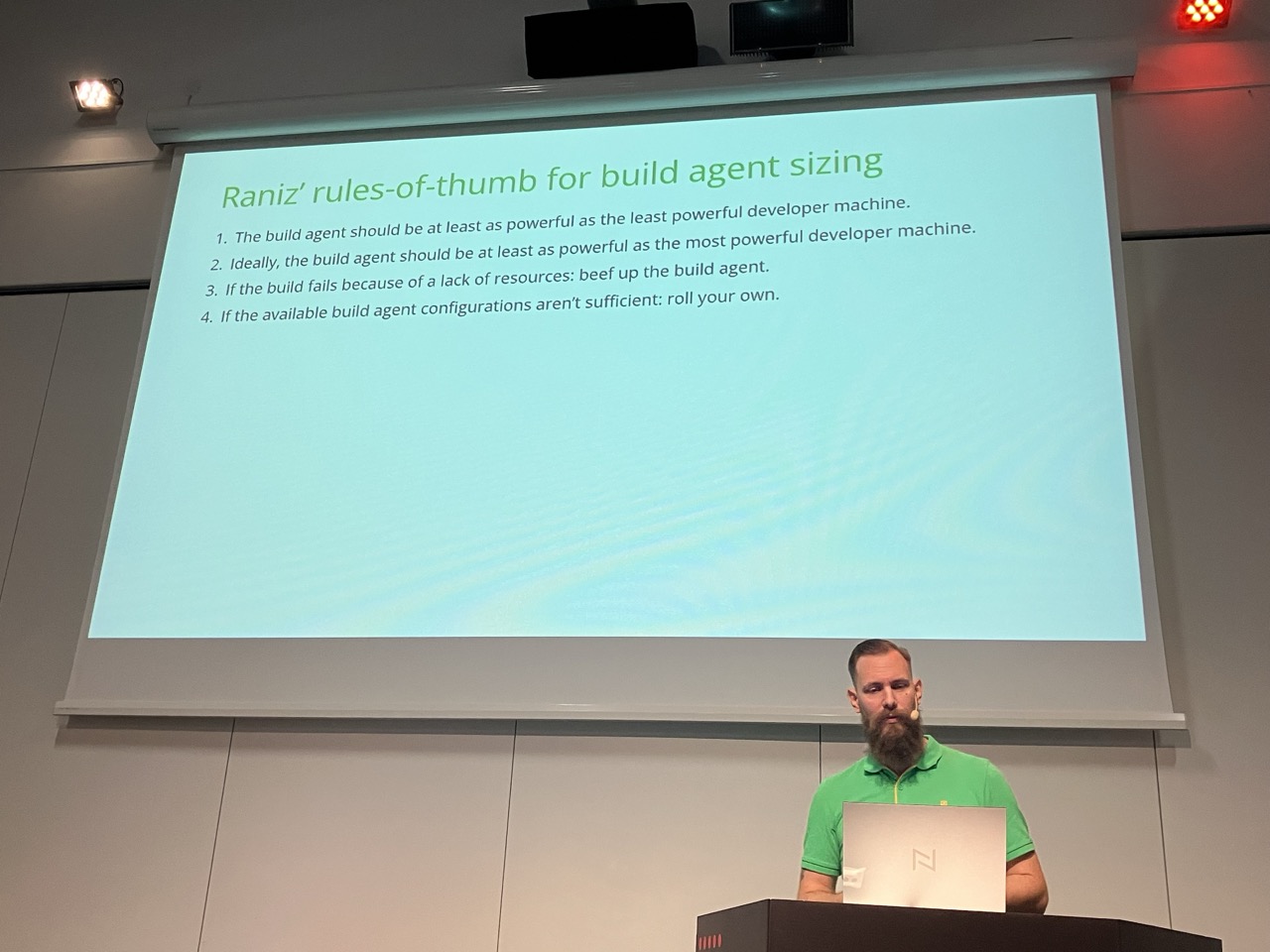

Pipeline Patterns and Antipatterns - Things your Pipeline Should (Not) Do

Daniel Raniz Raneland shared some guidance on what we should not be doing when it comes to our pipelines, and fortunately, also guidance on what we could do instead.

I came to this talk to see how (if?) my team could work better with pipelines. I also spoke about this talk a fair bit with my better half at home, and he seemed happy to hear that his company are doing all the right things when I told him what he should be doing (“we are already doing that”, “we are already doing that too”).

Anywho, it was nice to bounce ideas off my husband when I got home because it helped me cement what I learned (unfortunately I barely took any notes, I just snapped away).

Some pipeline tools that Raneland is now looking into: